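Considerations when designing an artificial neural network. Network topology: the shape of the network (feed forward, feed backward). Activation function: the form of the output. Objectives: classification or regression? Can be expressed as a loss function or an error. Optimizers: weight update. Generalization: preventing overfitting. 2. activation function: determines the form of the output. 1. one-hot vector: outputs only one of several values, e.g. digit recognition. 2. softmax function: expresses each output as the probability that it occurs. 3. objective function: other objective functions. Mean absolute error / mae, Mean absolute percentag..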

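Problems with deeper artificial neural networks. Generalization: performance on data that was not used in training. Data set. Training set: the data set used for training. Validation set: a part of the given data set that is held out and used to verify performance. Test set: data that was not given and has never been seen. Because learning is carried out on the training set, the more the training is repeated, the higher the accuracy and the lower the error rate on the training set. The error rate on the validation set, however, falls at first and then rises, because the model has been fitted too closely to the training set alone. On the training set, this is overfitting(..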
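The fall-then-rise pattern of the validation error described above suggests a simple check: record the validation error after each epoch and find where it was lowest. The helper below is a hypothetical sketch (the name `best_validation_epoch` and the per-epoch error array are assumptions, not part of the post).

```c
#include <assert.h>
#include <stddef.h>

/* Return the index of the epoch with the lowest validation error.
   Epochs after this index are where the model starts to fit the
   training set more closely than the held-out data. */
size_t best_validation_epoch(const double *val_error, size_t n_epochs)
{
    assert(n_epochs > 0);
    size_t best = 0;
    for (size_t e = 1; e < n_epochs; e++)
        if (val_error[e] < val_error[best])
            best = e;
    return best;
}
```

With validation errors such as 0.40, 0.31, 0.25, 0.27, 0.33 the function returns epoch index 2, the last epoch before the error starts climbing again.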

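Backpropagation in a deep neural network. backpropagation: Strictly speaking, backpropagation is not a learning method but the algorithm that computes the gradient of the loss function in a perceptron network; in a broader sense, the term is also used for gradient-based learning as a whole. Reference: https://en.wikipedia.org/wiki/Backpropagation delta rule: https://en.wikipedia.org/wiki/Delta_rule Definition and proof of the delta rule. The delta rule is a special case of backpropagation for a single-layer network. Why can the delta rule not be used in a multi-layer network... As in Main Part 5, at first in neural network training d..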

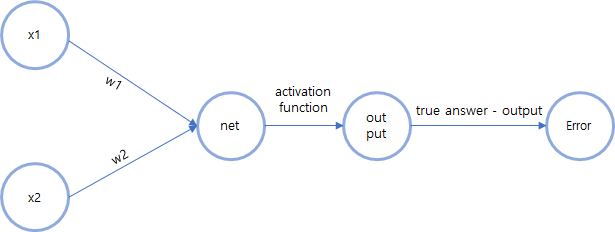

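Why theta is needed. The figure above shows the computation without theta. If the activation function is the sigmoid, can the network produce any other output when the input is (0,0)? Without theta, net is always 0 for the input (0,0), so the output after the sigmoid is always 1/2. To get a different output for the input (0,0), the sigmoid curve has to be shifted horizontally, relative to the output axis (y-axis). This is exactly what theta is for! net = x1*w1 + x2*w2 + theta, and for the input (0,0) O = f(net) = f(theta), so the graph can be thought of as shifted along the net axis by theta. On the left, in the original sigmoid function, (0..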

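How a neural network works. We can now use a multi-layer perceptron, but there is something we need to know to run it correctly. Given an input it can compute an output, but we have not yet implemented learning for the case where the output differs from the given answer. Here, learning means adjusting the values of w. To adjust w, using derivatives is better than changing it at random or nudging it little by little. Since we compute the derivative of the Error with respect to w and then move w by a fixed amount in the opposite direction, the Error must be expressible as a function of w, and the adjusted value of w must be expressible in terms of it. In short, adjusting w to reduce the error requires the derivative of the error with respect to w..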
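A minimal sketch of this idea, under simplifying assumptions that are not in the post: a single linear neuron with one weight, output w*x, and error E(w) = 0.5 * (t - w*x)^2, so that dE/dw = -(t - w*x) * x. The names `error_gradient`, `train_weight`, and the learning rate `lr` are hypothetical.

```c
#include <assert.h>

/* Derivative of E(w) = 0.5 * (t - w*x)^2 with respect to w. */
double error_gradient(double w, double x, double t)
{
    return -(t - w * x) * x;
}

/* Repeatedly move w a small step against the gradient of the error. */
double train_weight(double w, double x, double t, double lr, int steps)
{
    assert(steps >= 0);
    for (int s = 0; s < steps; s++)
        w -= lr * error_gradient(w, x, t);
    return w;
}
```

Starting from w = 0 with x = 2 and target t = 6, each step moves w toward 3, the value at which the output matches the target and the gradient becomes zero.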

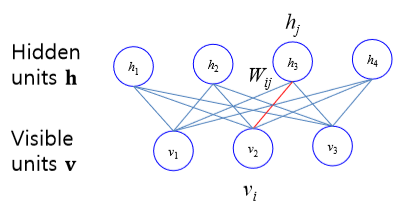

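Restricted Boltzmann machine (RBM). Structure: a single neural network made up of two layers (a visible layer and a hidden layer). Used as a building block of deep belief networks. Every node in one layer is connected to every node in the other layer, and nodes within the same layer are not connected. Written as a formula, the value of each hidden node is an expression over all the input nodes. Also called a symmetric bipartite graph. Unsupervised learning. During training, in the backward sweep the values pass across all inter-layer connections, just as in the forward sweep. That is, each visible-layer value is the sum of all hidden-layer values multiplied by their weights. The resulting error is the difference between the reconstructed value and the input value. forward sweep: the conditional probability of the hidden layer h for the input v, given w. backward sw..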

The beginning of deep learning. Writing out the forward propagation of such a multi-layer network as equations: h1 = f(x11*w11+x12*w21), net = h1*w13+h2*w23 = f(x11*w11+x12*w21)*w13+f(x11*w12+x12*w22)*w23. Now suppose that f, the activation function, is a linear function. Then f(x) = ax, so net = x11*a(w11*w13+w12*w23)+x12*a(w21*w13+w22*w23). This net is just the original input layer multiplied by constants, so it can be expressed as a single layer. In other words, even after passing through several layers, it still comes down to an easy problem ..
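The collapse can be verified directly. The sketch below uses the excerpt's own symbols (x11, x12, w11 ... w23, a); the function names `two_layer_net` and `one_layer_net` are hypothetical and serve only to compare the two forms.

```c
/* Two-layer forward pass with the linear activation f(x) = a*x. */
double two_layer_net(double x11, double x12,
                     double w11, double w12, double w21, double w22,
                     double w13, double w23, double a)
{
    double h1 = a * (x11 * w11 + x12 * w21);
    double h2 = a * (x11 * w12 + x12 * w22);
    return h1 * w13 + h2 * w23;
}

/* The same result written as ONE layer with two combined weights. */
double one_layer_net(double x11, double x12,
                     double w11, double w12, double w21, double w22,
                     double w13, double w23, double a)
{
    double v1 = a * (w11 * w13 + w12 * w23); /* combined weight on x11 */
    double v2 = a * (w21 * w13 + w22 * w23); /* combined weight on x12 */
    return x11 * v1 + x12 * v2;
}
```

Both functions return the same value for any inputs, so with a linear activation the hidden layer adds no expressive power.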

Changing the weights 1. Random. Exercise: one-layer perceptron. Build a perceptron that learns its weights at random. The number of inputs and weights is read from the user; the output is fixed to one. /* 2020-01-28 W.HE one-layer perceptron */ #include #include #include main() { /* variable set */ int input_num; float* input; float* w; float output = 0; float answer = 3; int try_num = 0; /* input input_num */ printf("enter number of inputs\n"); scanf_s("%d", &input_num); /* memory allocat..
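Since the program above is cut off, the following is a separate, hedged sketch of what learning weights at random could look like; it is not the post's code. It assumes the output is the plain weighted sum of the inputs with no activation, and the names `random_weight_search`, `tol`, `range`, and `max_tries` are hypothetical.

```c
#include <assert.h>
#include <math.h>
#include <stdlib.h>

/* Weighted sum of the inputs (assumed here to be the perceptron output). */
static double weighted_sum(const float *input, const float *w, int n)
{
    double sum = 0.0;
    for (int i = 0; i < n; i++)
        sum += (double)input[i] * (double)w[i];
    return sum;
}

/* Redraw every weight uniformly from [-range, range] until the output is
   within tol of answer. Returns the number of tries used, or -1 if
   max_tries draws were not enough. */
int random_weight_search(const float *input, float *w, int n,
                         float answer, float tol, float range, int max_tries)
{
    assert(n > 0 && max_tries > 0);
    for (int t = 1; t <= max_tries; t++) {
        for (int i = 0; i < n; i++)
            w[i] = range * (2.0f * (float)rand() / (float)RAND_MAX - 1.0f);
        if (fabs(weighted_sum(input, w, n) - answer) <= tol)
            return t;
    }
    return -1;
}
```

Calling `srand` with a seed before the search makes a run repeatable. Because each draw ignores the previous error, the number of tries grows quickly as `tol` shrinks or the number of weights increases.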
